1. Introduction

Never before has the world of architecture produced and consumed so much data: data that generate architectures, and architectures that generate data. Information from the real world is acquired, aggregated and transformed (approximated) by digital tools, through a process strongly linked to the subjectivity of those who guide it (Popper, 1969).

The spread of surveying technologies that allow the massive acquisition of data has had a significant impact on the discipline of surveying, partially reversing the traditional process. The new tools shift the discretionary phase of the survey project to the data processing phase. Data are thus collected extensively, reducing or eliminating the initial phase of interpretation by the surveyor, and are instead configured as raw data ready for multiple interpretations (synchronic or diachronic). Moreover, current developments are leading to the widespread application of BIM (Building Information Modelling), which works in a parametric environment and fosters standardisation and replicability.

Within this process, enriching geometric data through the aggregation of information is essential: it produces entities that go beyond mere anatomical representation (Centofanti, 2010) and makes explicit the semantic and ontological correlations that allow a full understanding of the architectural organism (Maietti et al., 2020).

Opening up the surveyed data to multiple interpretations requires the definition of a structured methodology (Bianchini, 2014) that drives the process according to rules for defining (with a degree of approximation set case by case) the level of reliability (LoR) of the represented three-dimensional geometry. The data are then accompanied by “paradata” (The London Charter[1]), which trace the choices made by each operator during all the processing phases.

The geometric information collected and processed, organised in a parametric spatial matrix, acts as a synoptic basis for the aggregation of further information levels. Born-digital or digitised data find their own location in a discrete environment that combines them and makes their correlations explicit, creating a “complex representative model” (Centofanti, 2018).

Complexity, made usable by its organisation within a discrete model, is placed in a shared digital environment that allows multiple actors to access and act on the collected information precisely and selectively, expanding investigation opportunities.

The research aims to propose a possible methodology for data management able to foster digitisation processes in the intervention on the existing building stock in Emilia-Romagna.

The digitisation of processes has been a widespread trend in the construction field for a long time (Negroponte, 1972), extending from the planning phase to cover the whole construction life cycle. This is even more the case when working on new buildings, where regulations[2] (European, national, or regional) recommend or impose intervention guidelines to achieve EU objectives regarding land consumption, sustainability, and energy saving.

The research context, limited to the Emilia-Romagna region, includes a very extensive 20th-century building heritage.[3] Because of its age or specific needs (for instance, subsidence or seismic risk), this heritage requires adaptation works such as restoration of materials and components, plant upgrading, roof renovation, or structural reinforcement.

Digitising the processes applied to existing buildings requires procedures that consider the needs of all the operators involved, whether owners, officials, contractors, component manufacturers or technicians.

In the Emilia-Romagna territory, the construction sector (OICE[4]) is mainly made up of small and medium-sized enterprises that have historically been reluctant to adopt this type of technological shift. To support the implementation of innovative processes in this context, it is necessary to provide tools and methodologies that are simple to use, immediately adoptable, and able to help operators understand what added value they can gain from them.

The digital component in architecture is supported by the growing availability of hardware and software tools at increasingly favourable costs. The output of these tools is a data source widely used during all phases of a building’s life.

For instance, a network of temperature sensors (RICS, 2014) housed in interconnected thermostatic valves (wired/wireless) regulates the temperature inside a building semi-autonomously. The data collected by the sensors are processed by a control unit that operates according to sets of instructions derived from specific calculation models, maintaining constant indoor comfort as external conditions vary while containing costs. Similar automation processes, including much more complex ones, are becoming increasingly popular because the value they generate (energy efficiency, in this case) justifies the investment required to implement them.
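To make the idea of a “set of instructions” concrete, the sketch below shows one of the simplest rules such a control unit could apply: on/off control with a hysteresis band around a setpoint for a single thermostatic valve. The class name, setpoint and band width are hypothetical, and commercial systems typically rely on more elaborate models (for example PID or predictive control); this only illustrates the principle.

```python
from dataclasses import dataclass


@dataclass
class ValveController:
    """Hypothetical on/off controller with a hysteresis band around a setpoint."""
    setpoint_c: float = 20.0   # target indoor temperature (assumed value)
    band_c: float = 0.5        # half-width of the dead band (assumed value)
    valve_open: bool = False   # current valve state

    def update(self, measured_c: float) -> bool:
        """Return the new valve state for one temperature reading."""
        if measured_c < self.setpoint_c - self.band_c:
            self.valve_open = True      # too cold: open the valve to let heat in
        elif measured_c > self.setpoint_c + self.band_c:
            self.valve_open = False     # too warm: close the valve
        # inside the band: keep the previous state to avoid rapid on/off cycling
        return self.valve_open


if __name__ == "__main__":
    ctrl = ValveController()
    readings = [19.2, 19.6, 20.3, 20.6, 20.2, 19.4]
    print([ctrl.update(t) for t in readings])
    # -> [True, True, True, False, False, True]
```

The dead band is the design choice that matters here: without it, a reading hovering around the setpoint would make the valve switch at every sample.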

Managing and using data thus becomes a source of value. Processes that previously would have required the efforts of several professionals are now carried out semi-automatically, in real time.

However, generating data requires devices (e.g., sensors) interconnected via infrastructures (wired/wireless, e.g., a Wi-Fi network connected to a data collection system). Such infrastructures require architectural arrangements that should be planned in the design phase for new constructions, or otherwise obtained during the redevelopment process, as in the case of interventions on existing buildings.

2. State of the Art

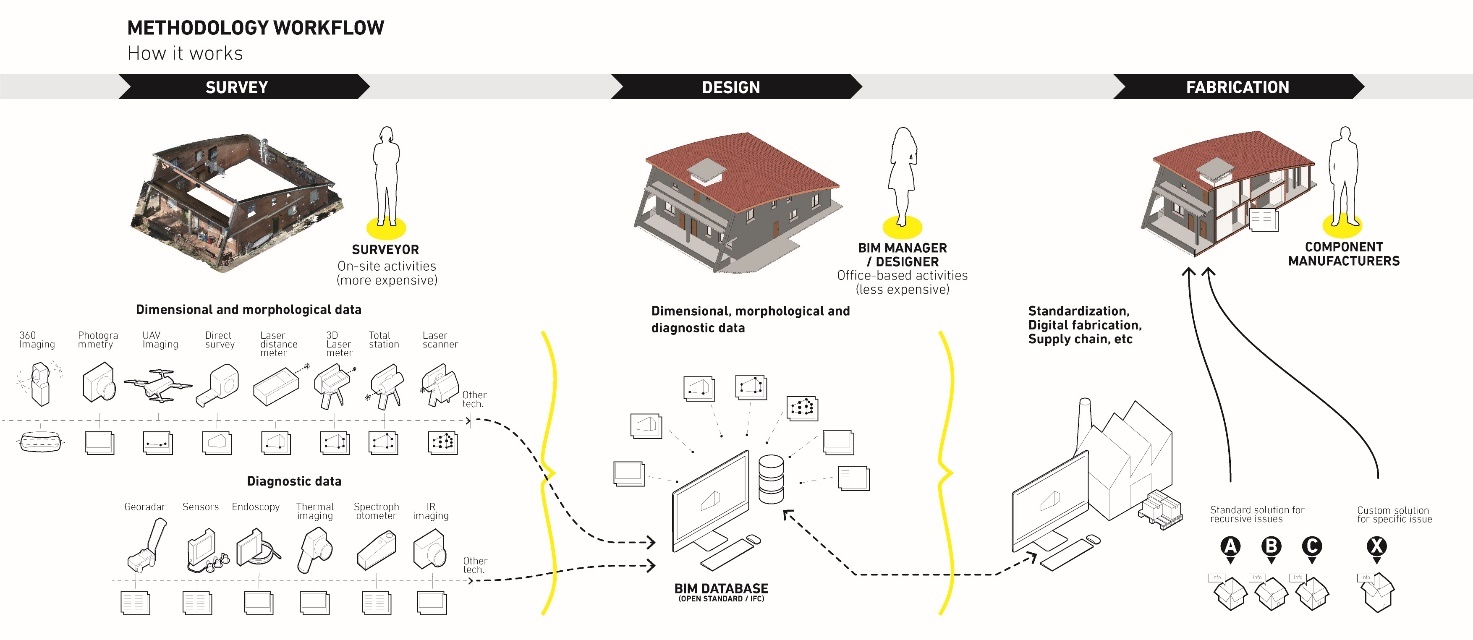

In the field of intervention on the built environment, the data defining the existing state of a building are “created” through a multidisciplinary investigation process (Fig. 1). This analytical method produces multiple datasets (often of very different kinds), which are handled through specific processes aimed at transforming data into information.

Besides converting a large amount of data into information, this process generates information itself. This information, called paradata,[5] is the trace of the discrete method used for the transformation and lays the foundations for the ontological organisation of the collected information, guiding the process towards a correct understanding of the object (De Luca et al., 2007). The information obtained is then used to define a synthesis model that represents, as accurately as possible, the characteristic elements of the building and all the components that define it (structural and HVAC, to name a few) (Fuller et al., 2020).

Data collection tools are now advanced technologies, constantly developed by manufacturers with a focus on performance and ease of use. Ease of use is crucial for broadening the user base and making these tools applicable in new fields.

One example is the evolution of the terrestrial laser scanner, which in the space of twenty years has gone from a complex instrument (Tucker, 2002) to a portable device that can be sold in an Apple Store and controlled through an app.[6]

The SfM (Structure from Motion) procedure (Luhmann et al., 2013) is also widely used. Considered one of the most promising technologies, it uses algorithms that process a series of overlapping two-dimensional images to generate a three-dimensional point cloud. This makes it possible to use hardware developed for other purposes (e.g., DSLR cameras, smartphones, or action cams) together with dedicated commercial software. The technology has such promising development prospects that even manufacturers of traditional laser survey instruments are investing in the acquisition of companies that develop these algorithms.
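An SfM pipeline chains several steps (feature matching, camera pose estimation, bundle adjustment); one core step is triangulating a 3D point from its projections in two images whose camera matrices are already known. The sketch below shows linear (DLT) triangulation with NumPy, assuming the 3x4 projection matrices have been estimated beforehand; the function names and the synthetic camera set-up are illustrative and do not describe any particular commercial package.

```python
import numpy as np


def triangulate(P1: np.ndarray, P2: np.ndarray, x1, x2) -> np.ndarray:
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 camera projection matrices (assumed known from pose estimation).
    x1, x2: (u, v) pixel coordinates of the same feature in each image.
    Returns the 3D point as a length-3 array.
    """
    # Each view contributes two linear equations in the homogeneous point X.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The least-squares solution of A X = 0 is the last right singular vector.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]   # back from homogeneous coordinates


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a 3D point with camera matrix P and return pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


if __name__ == "__main__":
    K = np.array([[800.0, 0, 320], [0, 800.0, 240], [0, 0, 1]])    # assumed intrinsics
    P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])               # first camera at origin
    P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0], [0]])])   # second camera 1 unit to the side
    X_true = np.array([0.3, -0.2, 5.0])
    X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
    print(np.round(X_est, 6))
```

With noise-free synthetic observations the point is recovered exactly; with real image measurements the result is only a least-squares estimate, which SfM software then refines.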

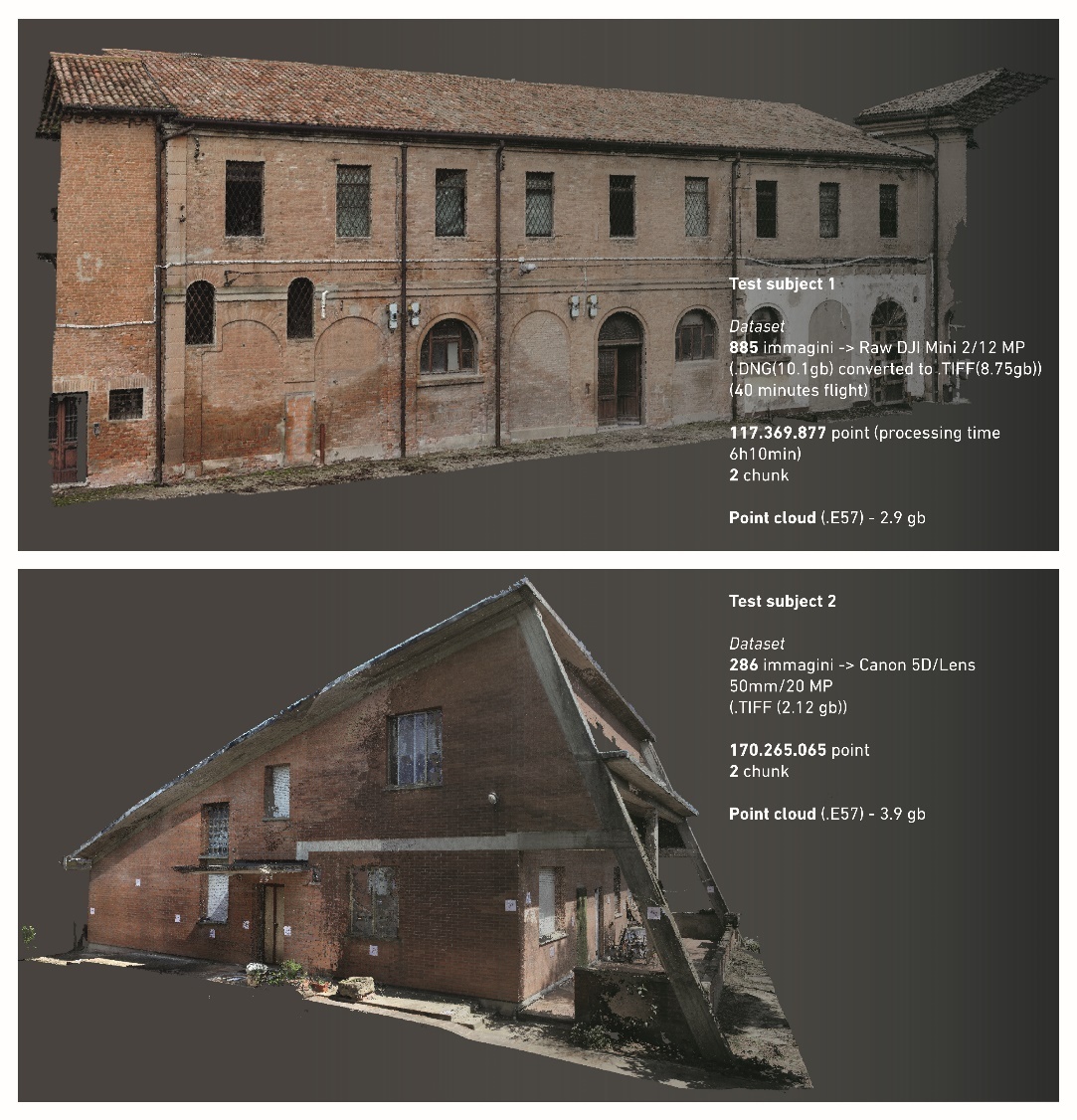

Both technologies described above produce a “point cloud” as output. Each point, described in a three-dimensional environment, carries spatial information (X, Y, Z) and additional information such as an intensity value or an RGB value. What currently prevents the two technologies from being used interchangeably is the accuracy of the generated data: today, a survey workflow based on laser scanning is still more reliable than an SfM workflow, though usually slower and more expensive (Fig. 2).
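The per-point content just described can be represented as a simple record. The sketch below is a minimal, hypothetical in-memory structure, not the layout of any specific exchange format (formats such as E57 or LAS define their own fields). It also shows how the extra attributes can be put to use, here by keeping only returns above an assumed intensity threshold.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class CloudPoint:
    """One point of a point cloud: position plus optional attributes (hypothetical layout)."""
    x: float
    y: float
    z: float
    intensity: Optional[float] = None              # laser return strength (laser scanner)
    rgb: Optional[Tuple[int, int, int]] = None     # colour (SfM, or scanner camera)


def filter_by_intensity(points: List[CloudPoint], min_intensity: float) -> List[CloudPoint]:
    """Keep points whose intensity is known and at least min_intensity (assumed criterion)."""
    return [p for p in points if p.intensity is not None and p.intensity >= min_intensity]


if __name__ == "__main__":
    cloud = [
        CloudPoint(0.0, 0.0, 0.0, intensity=0.9, rgb=(200, 180, 160)),
        CloudPoint(1.0, 0.0, 0.0, intensity=0.1),
        CloudPoint(0.0, 1.0, 0.0, rgb=(10, 10, 10)),   # SfM point: colour only
    ]
    print(len(filter_by_intensity(cloud, 0.5)))      # -> 1
```

Making the attributes optional reflects the difference between the two technologies: SfM points normally carry colour but no laser intensity.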

One strength of SfM is that it can use images acquired by consumer SAPRs (drones), which allow investigations in areas that are difficult to inspect with other technologies (roofs, crumbling buildings, large portions of land) at relatively low cost.

A laser and/or SfM survey provides the data needed to create an aggregate “point cloud” with a degree of accuracy defined according to the purpose, which is indispensable for creating a synthesis model of the object. The accuracy and reliability of the data become essential information for the subsequent phases (Fig. 3).
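Aggregating several scans or photogrammetric blocks into one cloud requires registering them in a common reference system. When the same targets are identified in two scans, a common approach is to estimate the rigid transformation by least squares (the Kabsch/SVD method) and to report the residual RMS error as an accuracy indicator. The sketch below assumes the target correspondences are already known; it is illustrative and not the procedure of a specific software package.

```python
import numpy as np


def rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t such that dst ~= src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding target coordinates
    (N >= 3, not all collinear). Correspondences are assumed known.
    """
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # guard against a reflection
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = c_dst - R @ c_src
    return R, t


def rms_residual(src: np.ndarray, dst: np.ndarray, R: np.ndarray, t: np.ndarray) -> float:
    """Root-mean-square distance between transformed source and destination targets."""
    diff = src @ R.T + t - dst
    return float(np.sqrt((diff ** 2).sum(axis=1).mean()))


if __name__ == "__main__":
    targets_a = np.array([[0, 0, 0], [1, 0, 0], [0, 2, 0], [0, 0, 3]], dtype=float)
    angle = np.radians(30)
    R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                       [np.sin(angle), np.cos(angle), 0.0],
                       [0.0, 0.0, 1.0]])
    targets_b = targets_a @ R_true.T + np.array([10.0, 5.0, 0.5])
    R, t = rigid_transform(targets_a, targets_b)
    print(rms_residual(targets_a, targets_b, R, t))   # close to zero for exact data
```

On real targets the residual is not zero: it is precisely the figure that documents how accurate the aggregated cloud is.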

In addition to morphological aspects, other data useful for an in-depth knowledge of the case study are also collected. Stratigraphic data, thermographic data, and temperature or humidity trends (to name a few) contribute to defining important information layers, depending on the specific needs of the object. Since these data are heterogeneous in terms of output, they require specific methodological steps to be converted into information (often through databases) that can be used within a three-dimensional digital model.
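A minimal sketch of such a conversion, using Python’s built-in sqlite3 module: readings from hypothetical humidity sensors are stored in a table keyed to the identifier of a model element (in practice this could be, for example, the IFC GlobalId of the element exported from the BIM model). A query then returns information per element rather than raw values. Table names, columns and identifiers are assumptions made for illustration.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Create an in-memory database linking sensor readings to model elements."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE element (
            element_id TEXT PRIMARY KEY,   -- hypothetical id, e.g. an IFC GlobalId
            name       TEXT NOT NULL
        );
        CREATE TABLE reading (
            element_id TEXT NOT NULL REFERENCES element(element_id),
            quantity   TEXT NOT NULL,      -- e.g. 'relative_humidity'
            value      REAL NOT NULL,
            taken_at   TEXT NOT NULL       -- ISO 8601 timestamp
        );
    """)
    return con


def mean_by_element(con: sqlite3.Connection, quantity: str):
    """Average value of one quantity for each element, as (name, mean) rows."""
    return con.execute(
        """SELECT e.name, AVG(r.value)
           FROM reading r JOIN element e ON e.element_id = r.element_id
           WHERE r.quantity = ?
           GROUP BY e.element_id
           ORDER BY e.name""",
        (quantity,),
    ).fetchall()


if __name__ == "__main__":
    con = build_db()
    con.execute("INSERT INTO element VALUES ('wall-01', 'North wall')")
    con.executemany(
        "INSERT INTO reading VALUES ('wall-01', 'relative_humidity', ?, ?)",
        [(62.0, "2021-03-01T08:00"), (66.0, "2021-03-02T08:00")],
    )
    print(mean_by_element(con, "relative_humidity"))   # -> [('North wall', 64.0)]
```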

The technologies used and the required processing workflow determine more or less substantial “intervention budgets”. It is therefore necessary, during the planning phase of the survey campaign, to examine carefully which of these technologies to employ, and when and how.

The digital model thus becomes a synthetic container, created using a discrete method and set up to match as closely as possible the needs for which it was created (Bolognesi & Fiorillo, 2019). Massive data collection is not always good practice. Excessive data (hence excessive information) can overwhelm computing systems with limited resources: a point cloud that is too dense and heavy is unusable on most workstations, just as a set of sensor data can provide misleading indications.
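One common way to keep a point cloud usable on ordinary workstations is voxel-grid downsampling: space is divided into cubes of a chosen size, and the points falling in each cube are replaced by their centroid. The sketch below is a plain-Python version of that technique, assuming points are given as (x, y, z) tuples; the voxel size is a parameter to be chosen according to the accuracy required by the survey purpose, and point cloud software offers optimised equivalents.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Iterable[Point], voxel_size: float) -> List[Point]:
    """Replace all points inside each cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        # integer index of the voxel containing the point, along each axis
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    result: List[Point] = []
    for pts in buckets.values():
        n = len(pts)
        # centroid of the points that fell into this voxel
        result.append(tuple(sum(p[i] for p in pts) / n for i in range(3)))
    return result


if __name__ == "__main__":
    cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (0.51, 0.0, 0.0)]
    print(len(voxel_downsample(cloud, 0.1)))   # -> 2
```

A larger voxel means a lighter cloud but coarser geometry, which is exactly the trade-off between data volume and the degree of approximation the purpose allows.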

3. Case Studies

The methodological process proposed by the research will be tested on case studies belonging to the existing built heritage in Emilia-Romagna. Among the indicators used to identify the type and number of case studies, some criteria emerged from the ANCE[7] reports on the regional heritage, which show quite evident quantitative and qualitative trends in the building stock. The key indicators are the period of construction, building technology and intended use. Identifying case studies belonging to these groups can contribute to the definition of a detailed methodology covering a significant number of buildings with the same features and needs in terms of documentation, upgrading or adaptation. Once again, data collected and organised in databases[8] and queried with filters can provide helpful information for decision-making.
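As an illustration of this kind of filtering, the sketch below groups a small set of building records by the three key indicators (construction period, building technology, intended use) and ranks the groups by size, so that case studies can be drawn from the most represented groups. The records, category labels and function names are invented for the example and do not come from the ANCE or ISTAT datasets.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical records, one dict per building (not real survey or statistical data).
BUILDINGS: List[Dict[str, str]] = [
    {"id": "B1", "period": "1946-1960", "technology": "masonry", "use": "residential"},
    {"id": "B2", "period": "1961-1980", "technology": "reinforced concrete", "use": "residential"},
    {"id": "B3", "period": "1961-1980", "technology": "reinforced concrete", "use": "residential"},
    {"id": "B4", "period": "1961-1980", "technology": "reinforced concrete", "use": "school"},
    {"id": "B5", "period": "1946-1960", "technology": "masonry", "use": "residential"},
    {"id": "B6", "period": "1961-1980", "technology": "reinforced concrete", "use": "residential"},
]


def rank_groups(buildings: List[Dict[str, str]]) -> List[Tuple[Tuple[str, str, str], int]]:
    """Count buildings per (period, technology, use) group, largest group first."""
    counts = Counter((b["period"], b["technology"], b["use"]) for b in buildings)
    return counts.most_common()


def select(buildings: List[Dict[str, str]], period: str, technology: str, use: str) -> List[str]:
    """Return the ids of the buildings matching one combination of indicators."""
    return [b["id"] for b in buildings
            if (b["period"], b["technology"], b["use"]) == (period, technology, use)]


if __name__ == "__main__":
    top_group, size = rank_groups(BUILDINGS)[0]
    print(top_group, size, select(BUILDINGS, *top_group))
```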

One of the key selection criteria was a low number of co-owners: difficulties in obtaining the authorisations required to carry out building surveys could have lengthened the research timeframe and undermined its effectiveness.

It was therefore decided to select case studies belonging to Azienda Casa Emilia-Romagna (Acer). This key regional stakeholder owns several buildings whose characteristics fully meet the selected research criteria. Equally important was Acer’s willingness to invest resources (dedicated staff time, infrastructure) in the digitisation of its heritage.

The COVID-19 pandemic slowed down the definition of individual case studies according to the stakeholders’ needs, since the restrictions made any survey inside inhabited buildings impossible. To overcome this issue, sample buildings (Fig. 4) were identified and used to define and test the research methodologies. The uncertainty about how long the emergency would last highlighted how a working method based on massive data acquisition (point clouds) allows an operator to carry out synthetic modelling without physically going to the site.

4. Research Methodology

The methodology adopted for this research is empirical/deductive, based on validating the process through an iterative approach.

In this research, the analysis of currently available methods for creating and managing data, together with the definition of effective procedures for transforming data into information and knowledge, forms the basis of a methodological process that analyses and discretises the possible approaches to solving the problem of technological permeability (Fig. 5).

The main areas of investigation are closely linked to the technological maturity of the tools, infrastructures, and operational practices currently in use. Such technologies – even those coming from different fields (e.g., AR/VR, Digital Fabrication, or Blockchain) – must be sufficiently mature to guarantee short and medium-term effects without running the risk of proposing attractive solutions linked to the “fashions” of the moment, which in practice would require indefinite time for widespread adoption.

Of no less importance are the aspects arising from applying regulations (European, national, regional) and their effects on operational practices. Developing a theoretically high-performance methodology, which contrasts with the current practices, partly defeats the “ready-made” concept that lies at the heart of the research.

4.1 Scope and Survey Campaign

Defining the purpose of data collection is an essential step in planning the field intervention. Knowing the purpose makes it possible to structure the process a priori and to define which technologies and methodologies to use, thus setting its budget. The purpose may be purely documentary, or the survey may be an analytical investigation phase within a broader process involving subsequent intervention actions. Depending on the reasons governing the intervention, it is possible to plan one-off activities (a single survey, more or less integrated) or medium to long-term scheduled activities that return data describing a trend over time (Brusaporci, 2015). Indeed, different types of intervention require specific tools and related acquisition methods.
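When monitoring is scheduled over the medium to long term, the quantity of interest is often a trend rather than a single value. A minimal way to quantify it is the ordinary least-squares slope of the readings against time. The sketch below assumes readings with time stamps expressed in days and uses invented humidity values; real monitoring would also need to account for seasonality and sensor drift.

```python
from typing import Sequence


def linear_trend(t: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary least-squares slope of y against t (units of y per unit of t)."""
    if len(t) != len(y) or len(t) < 2:
        raise ValueError("need at least two paired observations")
    n = len(t)
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    # slope = covariance(t, y) / variance(t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    if sxx == 0:
        raise ValueError("all time stamps are identical")
    return sxy / sxx


if __name__ == "__main__":
    days = [0, 30, 60, 90]                  # hypothetical monthly readings
    humidity = [60.0, 61.5, 63.0, 64.5]     # relative humidity in % (invented values)
    print(round(linear_trend(days, humidity), 4))   # -> 0.05 (% per day)
```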

4.2 Equipment and Methodologies

The tools needed to pursue the research objectives are those that actually collect information during an integrated digital survey campaign. They are tested and analysed to determine which aspects help in planning the survey campaign.

The final purpose of the survey shapes the whole process, from data collection through processing to the modelling phase. It can therefore be said that the purpose of the survey determines, from the outset, the budget (economic and time) allocated to the various work phases. More accurate instruments, which produce higher-quality data but require adequate funding, will only be used in interventions where they are truly sustainable; other interventions will use less accurate but still sufficient tools, in line with the purpose defined in the planning phase. In this direction, previous protocols and workflows aimed at guiding digitisation processes while respecting the needs, requirements and specificities of the heritage assets to be surveyed have been analysed (Di Giulio et al., 2017).

The planned instruments can be divided into dimensional and morphological survey instruments and diagnostic instruments.

The survey instruments include 360° cameras, DSLR cameras, SAPRs, traditional direct survey instruments, digital survey instruments (Disto, Disto 3D), total stations, and laser scanners.

The diagnostic instruments include georadar, active sensors (sensors and data takers), endoscopy, thermography, spectrophotometry, and IR imaging.

The data obtained with these instruments are collected, processed, and stored using hardware and software appropriately sized in terms of computing and storage capacity. The final objective of the research is to share the information acquired and conveyed through the synthesis model.

The technologies used are all available and have advanced levels of technological maturity.

4.3 Data Process and Analysis

Once on-site data collection has been completed, a preliminary check is carried out to assess the compliance and quality of the collected material. Handling unforeseen hardware and software issues on-site can save a great deal of time. Today’s digital tools make it possible to check the quality of collected data in real time and to make redundant copies to guarantee its integrity.
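Checking that redundant copies are identical can be automated by comparing cryptographic checksums. The sketch below uses Python’s standard hashlib to compute SHA-256 digests in chunks (so that multi-gigabyte scan files are never loaded into memory at once) and lists the files whose copy is missing or differs. The folder layout, with the copy mirroring the original directory tree, is an assumption.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def mismatched_copies(original_dir: Path, copy_dir: Path) -> List[str]:
    """Relative paths of files that are missing from, or differ in, the copy.

    Assumes copy_dir mirrors the directory tree of original_dir.
    """
    problems = []
    for src in sorted(p for p in original_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(original_dir)
        dst = copy_dir / rel
        if not dst.is_file() or sha256_of(src) != sha256_of(dst):
            problems.append(rel.as_posix())
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as a, tempfile.TemporaryDirectory() as b:
        original, backup = Path(a), Path(b)
        (original / "scan_01.e57").write_bytes(b"raw scan data")
        (backup / "scan_01.e57").write_bytes(b"raw scan data")
        print(mismatched_copies(original, backup))   # -> [] when the copy is identical
```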

At the end of the on-site activities, it is possible to proceed to the data processing and analysis phase. In this phase, paradata are created in addition to information (Apollonio & Giovannini, 2015). The raw data are processed using specific procedures optimised for each type of data (geometric, two-dimensional, and informative, among others) to obtain ontologically structured information. This process requires a meticulous data storage structure: at least one unaltered copy of the original data is kept, as well as a copy of the data produced at each subsequent stage of the process.

In addition to the data in their phase-specific form, the activities carried out by the operator who processed them (paradata) are also recorded. Each dataset is processed with specific software applications that alter its structure at each step. During processing, an operator may realise that a procedure was carried out incorrectly, and it is not always possible to undo the operations performed (considering datasets of tens of GB). Having a copy of the starting data and a record of the operations carried out is therefore not an excessive scruple but a fundamental requirement. The material collected and processed, and all the information created throughout the process, is organised in dedicated storage facilities[9] with a standardised organisational structure. It is accessible to the operators involved and has a level of redundancy that guarantees security (local copies for daily operations and the cloud for long-term storage).
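The record of operations (paradata) can be kept as an append-only log in which each entry states who did what, with which tool and settings, and which input produced which output. The sketch below writes one JSON object per line (JSON Lines). The field names, tool name and dataset paths are hypothetical; storing input and output references is an assumed convention that lets each processing step be traced back to the unaltered original data.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


def log_operation(log_file: Path, operator: str, tool: str, operation: str,
                  parameters: Dict, input_ref: str, output_ref: str) -> Dict:
    """Append one paradata entry to a JSON Lines file and return it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "operator": operator,
        "tool": tool,              # software name and version used for the step
        "operation": operation,
        "parameters": parameters,  # settings chosen by the operator
        "input": input_ref,        # path or checksum of the input dataset
        "output": output_ref,      # path or checksum of the produced dataset
    }
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def read_log(log_file: Path) -> List[Dict]:
    """Read back all entries in the order they were recorded."""
    with log_file.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        log = Path(d) / "paradata.jsonl"
        log_operation(log, "operator-A", "ExampleSoftware 1.0", "registration",
                      {"method": "targets"}, "raw/scan_01", "proc/cloud_01")
        print(len(read_log(log)))   # -> 1
```

Because entries are only ever appended, an incorrect step can be identified afterwards and the processing chain replayed from the last correct input.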

4.4 Data Aggregation (Modelling)

After the processing phase, the information collected is aggregated and contributes to creating the model. The model is produced according to the purpose and gathers and integrates information into a kind of knowledge tool. The parametric model (Fig. 6), which is created by employing specific commercial applications, makes it possible to aggregate and superimpose sets of information belonging to different disciplines, cataloguing them ontologically (Olawumi & Chan, 2019).

Aggregating parametric elements makes it possible to create a queryable knowledge model, useful both as an information container and as a tool for analysing the whole. The model is created to share information among the actors involved in the process (Daniotti et al., 2021); it is therefore converted from a proprietary format (specific to each software) into an open format that all stakeholders can use.

4.5 Data Sharing

The management system (storage/repository) described above, provided for by legislation as a “common data environment” (CDE/AcDAT), is used to share all available data. The person in charge of data protection ensures targeted sharing of information, since not all data can be freely shared or easily used by anyone. Targeted sharing is essential when working with a considerable amount of data, where there is a risk of unnecessarily overloading the system. The administrator of the sharing system is responsible for making the material dedicated to each activity available to the operators. The local sharing system can be hosted on a dedicated workstation or server, set up to perform redundant backups both locally and in the cloud. Data security remains a topical concern.
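Targeted sharing can be sketched as a filter applied to the repository index: each information container carries a status and the disciplines it concerns, and each operator only receives what is relevant to their role. The statuses below follow the work in progress / shared / published / archived states described for the common data environment in ISO 19650. The container fields, team names and the visibility rule itself are simplified assumptions for illustration, not a prescription of the standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List


class Status(Enum):
    WORK_IN_PROGRESS = "wip"
    SHARED = "shared"
    PUBLISHED = "published"
    ARCHIVED = "archived"


@dataclass(frozen=True)
class Container:
    """An information container in the repository (hypothetical fields)."""
    name: str
    author_team: str
    disciplines: FrozenSet[str]
    status: Status


def visible_to(containers: List[Container], team: str, discipline: str) -> List[str]:
    """Names of the containers a member of `team` working on `discipline` should receive.

    Assumed rule: a team always sees its own non-archived work; other teams see
    only shared or published containers that concern their discipline.
    Archived containers are excluded from day-to-day sharing.
    """
    result = []
    for c in containers:
        if c.status is Status.ARCHIVED:
            continue
        if c.author_team == team:
            result.append(c.name)
        elif c.status in (Status.SHARED, Status.PUBLISHED) and discipline in c.disciplines:
            result.append(c.name)
    return result


if __name__ == "__main__":
    repo = [
        Container("survey_pointcloud", "survey", frozenset({"architecture", "structures"}), Status.PUBLISHED),
        Container("thermography_raw", "diagnostics", frozenset({"hvac"}), Status.WORK_IN_PROGRESS),
    ]
    print(visible_to(repo, "structures_team", "structures"))   # -> ['survey_pointcloud']
```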

5. Conclusions and Further Activities

Data is the most valuable raw material of the future. European projects such as Gaia-X[10] show that data sovereignty will be a commercial battlefield in the not-too-distant future, one in which Europe (Autolitano & Pawlowska, 2021) owns less than 5% of the companies involved.[11] The data created today are stored in facilities that operate outside the European regulatory framework and over which Europe has no jurisdiction whatsoever.

Having established these two points, it is still correct to work towards the digitisation of processes.

The construction industry in Italy, which has been in crisis for the last twenty years, cannot afford to forgo the digital revolution as a driver for the growth and relaunch of the entire sector.

Developing digital ecosystems, in which all stakeholders in the supply chain (public/private) can work together, acting on a shared model that connects all areas of the construction process, can be a privileged starting point to solve some of the critical issues in the construction process.

The methodology under development is limited to investigating intervention needs supported by digital data sharing in the built environment. It also aims to provide operational tools for professionals, who will need to evolve their methods by adopting innovative technologies that offer a competitive advantage in a constantly evolving market.

Future developments may use the models created as tools for collecting data (e.g., energy consumption, maintenance statistics) to be applied to automated management through blockchain protocols (Carson et al., 2018), or as digital bases for the more or less automated integration of construction technologies used in restoration processes based on digital fabrication, from a supply chain and Industry 4.0 perspective.

Acknowledgements

The research is part of the IDAUP - International Doctorate in Architecture & Urban Planning, 35th cycle, consortium between the University of Ferrara, Department of Architecture, Polis University of Tirana and, as Associate Members, the University of Minho, Guimaraes (Portugal), Slovak University of Technology, Institute of Management, Bratislava (Slovakia) and University of Pécs / Pollack Mihaly Faculty of Engineering and Information Technology (Hungary). The research is being developed thanks to the grant funded by the Emilia-Romagna Region. Call Alte Competenze per la ricerca, il trasferimento tecnologico e l’imprenditorialità (Delibera di Giunta Regionale n. 39 del 14/01/2019), entitled: Application of integrated digital tools for surveying, diagnostics and BIM modelling to support innovation of components and systems, products and services with high added value for the intervention on existing buildings.

Doctoral fellowship approved by the Deliberation of the G.R. n. 462/2019 “Approval of the research training projects presented on the basis of the Call approved by its own resolution n. 39/2019. POR FSE 2014/2020” Ref. PA 2019-11299/RER - CUP F75J19000440009.

[1] The London Charter. Available at: http://www.londoncharter.org/ (Accessed: 14 May 2021).

[2] UNI EN ISO 19650; UNI 11337:2017.

[3] ANCE, Osservatorio congiunturale sull’industria delle costruzioni, January 2020. Available at: https://www.ance.it

[4] OICE, Annual report on the 2018 BIM competition for public works.

[5] The London Charter, version 2.1.

[6] Leica BLK2GO.

[7] Scenari regionali dell’edilizia, Centro studi ANCE. Available at: https://www.ance.it (Accessed: 14 May 2021).

[8] Dati statistici per il territorio: Regione Emilia-Romagna (2019). Available at: https://www.istat.it (Accessed: 5 April 2021).

[9] IT infrastructure for data collection and management (UNI 11337-5:2017).

[10] GAIA-X - Home. Available at: https://www.data-infrastructure.eu/GAIAX/Navigation/EN/Home/home.html (Accessed: 14 May 2021).

[11] Market Trends: Europe Aims to Achieve Digital Sovereignty With GAIA-X. Gartner. Available at: https://www.gartner.com/en/documents/3988433/market-trends-europe-aims-to-achieve-digital-sovereignty (Accessed: 14 May 2021).